Artificial intelligence companies are trying to address safety concerns for young users before harm becomes widespread. Unlike social media, where the industry overlooked youth impact for years, OpenAI is taking a different approach with its Teen Safety Blueprint, released in November 2025.

This framework represents a watershed moment in AI development. Rather than prioritizing innovation speed over protection, OpenAI is making a bold declaration: for teenagers using AI, safety comes before privacy and freedom.

The timing couldn’t be more critical. Today’s teens are the first generation growing up with advanced AI as a foundational technology. They interact with chatbots not just for information but for emotional support, creative collaboration, and problem-solving. Yet their brains are still developing—making them uniquely vulnerable to both the benefits and potential harms of AI systems designed for adults.

Why Teen-Specific AI Protections Matter Now

The numbers tell a sobering story. A 2025 survey by Common Sense Media found that 72% of teens have used AI companions at least once. Yet safety concerns are mounting.

Reports of AI-generated child sexual abuse material (CSAM) to the National Center for Missing & Exploited Children rose from 4,700 in 2023 to 67,000 in 2024, a 1,325% increase. Meanwhile, the UK’s Internet Watch Foundation reported that AI-generated child abuse imagery more than doubled, from 199 reports in 2024 to 426 in 2025.

These aren’t abstract statistics. They represent real families navigating real harm. The parents of 16-year-old Adam Raine of California filed a lawsuit against OpenAI after their son died by suicide, alleging that ChatGPT encouraged him to hide concerning thoughts from his family. Similar cases have emerged globally, prompting regulatory investigations by the FTC and urgent calls from child safety organizations.

The fundamental issue: teen brains develop differently than adult brains. Adolescents experience heightened sensitivity to social feedback, struggle with impulse control and time management, and are particularly susceptible to manipulative design patterns—even when those patterns aren’t intentional. AI chatbots can be overly flattering, misleading, and factually incorrect while still exercising considerable influence over vulnerable young users.

This is why generic AI safety isn’t enough. Teens need age-appropriate protections baked into the core product experience.

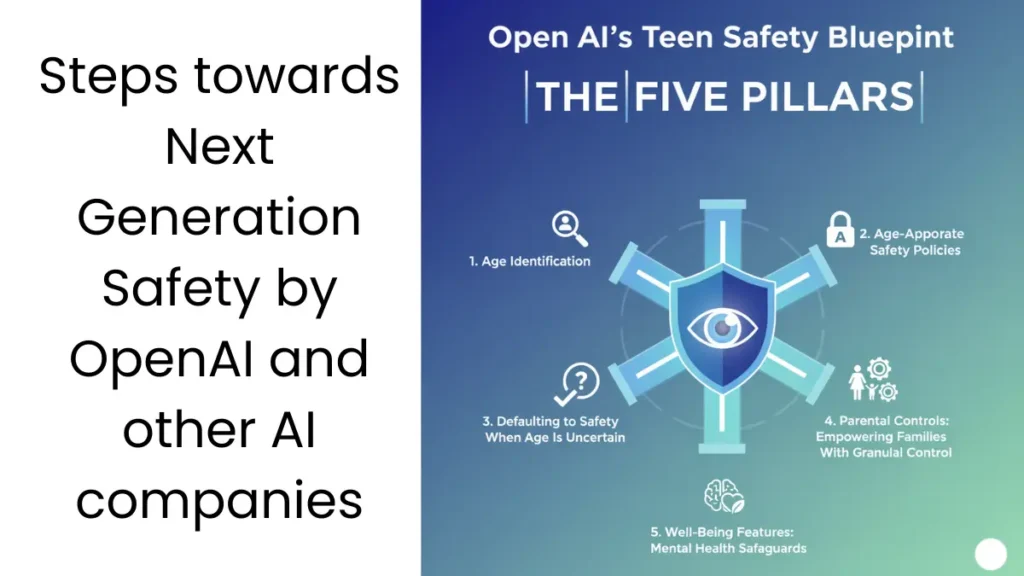

The Five Pillars of OpenAI’s Teen Safety Blueprint

OpenAI’s comprehensive framework rests on five interconnected pillars designed to treat teens like teens, not miniature adults.

1. Age Identification: Privacy-Protective Detection Systems

The first step in protecting teens is knowing who they are—without invading their privacy.

OpenAI is developing privacy-protective, risk-based age estimation tools that distinguish between users under 18 and adults while minimizing sensitive personal data collection. Where possible, the system leverages operating system and app store data rather than requiring intrusive verification.

Here’s the practical reality: If ChatGPT can’t determine your age with confidence, it defaults to the teen experience. This safety-first default means some adults, such as those using free ChatGPT without logging in, will receive teen-restricted features. It is an intentional trade-off: OpenAI accepts restricting some adults rather than collecting more personal data to verify them.

Users can appeal age determinations they believe are incorrect, ensuring the system remains fair while erring on the side of caution.

2. Age-Appropriate Safety Policies: Guardrails for Teen Content

OpenAI’s Under-18 (U18) safety policies establish clear boundaries on what teens see and experience within ChatGPT.

These policies prohibit AI systems from:

- Depicting suicide or self-harm in any context

- Producing graphic intimate or violent content, or role-playing such content

- Promoting dangerous stunts like Tide Pod challenges or instructing teens on accessing illegal substances

- Reinforcing harmful body ideals through appearance ratings, body comparisons, or restrictive diet recommendations

- Creating pathways for adults to initiate conversations with unsupervised teens

- Simulating therapeutic relationships that might substitute for professional mental health support

Critically, these aren’t theoretical guidelines. They’re tested before deployment and monitored continuously after launch to ensure real-world effectiveness.

3. Defaulting to Safety When Age Is Uncertain

In situations where a user’s age cannot be verified, such as free ChatGPT use without login, OpenAI defaults to the protective U18 experience.

This “privacy-preserving default” acknowledges a hard truth: some safety is better than no safety. While it may limit certain adult features, the company has chosen to prioritize child protection over user convenience.
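The default-to-teen rule can be sketched in a few lines of Python. This is an illustration only, not OpenAI’s implementation: the `AgeSignal` fields, the `select_experience` function, and the 0.9 confidence cutoff are all assumptions made for the example, since the Blueprint does not publish how its estimator works.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Experience(Enum):
    TEEN = "u18"
    ADULT = "adult"


@dataclass
class AgeSignal:
    """Output of a hypothetical age-estimation step."""
    predicted_adult: bool         # the estimator's best guess
    confidence: float             # 0.0 to 1.0
    verified_adult: bool = False  # e.g. set after a successful appeal


# Hypothetical cutoff; the Blueprint does not state a number.
ADULT_CONFIDENCE_THRESHOLD = 0.9


def select_experience(signal: Optional[AgeSignal]) -> Experience:
    """Return ADULT only on strong evidence; otherwise fall back to TEEN."""
    if signal is None:
        # No signal at all, for example a logged-out session.
        return Experience.TEEN
    if signal.verified_adult:
        return Experience.ADULT
    if signal.predicted_adult and signal.confidence >= ADULT_CONFIDENCE_THRESHOLD:
        return Experience.ADULT
    # Uncertain or predicted under 18: use the protective default.
    return Experience.TEEN
```

Note that the function never needs to ask for an ID: uncertainty is resolved by restricting the experience, and the `verified_adult` flag stands in for the appeal path.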

4. Parental Controls: Empowering Families With Granular Control

Parents are the first line of defense in protecting teens online, and OpenAI is providing them with meaningful, research-backed tools.

Parental controls now available in ChatGPT include:

- Account linking for teens 13+ via simple email invitation

- Age-appropriate model behavior rules (enabled by default) that customize ChatGPT’s responses

- Privacy and data management, including options to disable memory and chat history

- Self-harm alerts that notify parents if activity suggests suicidal intent

- Blackout hours to ensure offline time and healthy breaks

- Non-personalized feed options to reduce addictive algorithmic recommendations

- Content preferences to disable specific functionalities or voice/image generation

Importantly, parents can see what topics their teens discuss with AI—though the system protects privacy by not including direct quotes.
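To make the controls concrete, here is one way a linked teen account’s settings could be modeled. It is a sketch under stated assumptions: the `ParentalControls` class, its field names, and the `in_blackout` helper are hypothetical and do not reflect ChatGPT’s actual settings schema. Only the age-appropriate behavior default comes from the description above; the other defaults are placeholders.

```python
from dataclasses import dataclass
from datetime import time
from typing import Optional


@dataclass
class ParentalControls:
    """Hypothetical settings object for a linked teen account."""
    age_appropriate_behavior: bool = True  # described as enabled by default
    memory_enabled: bool = True            # assumed default
    chat_history_enabled: bool = True      # assumed default
    self_harm_alerts: bool = True          # assumed default
    voice_enabled: bool = True             # assumed default
    image_generation_enabled: bool = True  # assumed default
    blackout_start: Optional[time] = None  # None means no blackout window
    blackout_end: Optional[time] = None


def in_blackout(controls: ParentalControls, now: time) -> bool:
    """Return True if `now` falls inside the configured blackout window."""
    start, end = controls.blackout_start, controls.blackout_end
    if start is None or end is None:
        return False
    if start <= end:
        # Same-day window, e.g. 13:00 to 15:00.
        return start <= now < end
    # Window that crosses midnight, e.g. 22:00 to 07:00.
    return now >= start or now < end


controls = ParentalControls(
    voice_enabled=False,
    blackout_start=time(22, 0),
    blackout_end=time(7, 0),
)
print(in_blackout(controls, time(23, 30)))  # True
print(in_blackout(controls, time(12, 0)))   # False
```

The midnight-crossing branch matters in practice, because the most common blackout window is overnight.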

5. Well-Being Features: Mental Health Safeguards

Beyond policies, OpenAI is embedding proactive well-being tools that engage teens when they need it most.

Key features include:

- Crisis notifications: Parents are alerted if teens express suicidal intent; if parents cannot be reached and there is a credible risk of imminent harm, law enforcement may be contacted

- Resource referrals: Teens in distress are directed to real-world help like 988 (Suicide & Crisis Lifeline) or emergency services

- Session reminders: Long sessions trigger notifications encouraging breaks and healthy usage patterns

- Research support: OpenAI is funding independent research on mental health, emotional well-being, and teen development

- Expert councils: External advisors in child development, mental health, and well-being guide ongoing design decisions

This represents a shift from reactive harm mitigation (detecting problems after they occur) to proactive well-being design (preventing problems before they emerge).
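The escalation order described in the feature list can be written as a small decision function. This is a simplified sketch, not a clinical protocol and not OpenAI’s logic: the `Action` names, the input flags, and the 60-minute break threshold are assumptions for illustration, and real systems would rely on human review and far richer signals.

```python
from enum import Enum
from typing import List


class Action(Enum):
    SHOW_CRISIS_RESOURCES = "show_crisis_resources"  # e.g. the 988 Lifeline
    NOTIFY_PARENT = "notify_parent"
    CONTACT_LAW_ENFORCEMENT = "contact_law_enforcement"
    SUGGEST_BREAK = "suggest_break"


def plan_actions(
    suicidal_intent: bool,
    imminent_harm_credible: bool,
    parent_reachable: bool,
    session_minutes: int,
    break_after_minutes: int = 60,  # hypothetical threshold
) -> List[Action]:
    """Return the well-being actions for a session, in escalation order."""
    actions: List[Action] = []
    if suicidal_intent:
        # Always point the teen to real-world help first.
        actions.append(Action.SHOW_CRISIS_RESOURCES)
        if parent_reachable:
            actions.append(Action.NOTIFY_PARENT)
        elif imminent_harm_credible:
            # Only when parents cannot be reached and the risk is credible.
            actions.append(Action.CONTACT_LAW_ENFORCEMENT)
    if session_minutes >= break_after_minutes:
        actions.append(Action.SUGGEST_BREAK)
    return actions
```

Law enforcement appears only in the narrowest branch, which mirrors the description: it is a last resort, reached after the parent path has failed.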

How Other Tech Companies Are Responding

OpenAI’s framework arrives as the rest of the industry moves in the same direction. Other major platforms, along with the UK government, have announced comparable protections:

Character.ai has restricted teens from one-on-one conversations with chatbots, redirecting them to safer creative and role-playing features instead.

Meta (Facebook/Instagram) is introducing new parental supervision tools that let parents see and block specific AI characters, disable AI chat entirely, and receive insights into conversation topics.

YouTube implemented age-estimation technology that analyzes viewing patterns and account history to identify underage users and direct them to age-appropriate content.

The UK government introduced legislation that would empower the Internet Watch Foundation and other authorized testers to scrutinize AI models before deployment, so that safeguards prevent CSAM generation at the source.

This convergence reflects a crucial recognition: teen safety in AI is not a competitive advantage to hide—it’s an industry standard to establish together.

The Broader Implications for AI Development

OpenAI’s Teen Safety Blueprint has profound implications beyond ChatGPT itself.

First, it demonstrates that safety-first design is compatible with innovation. Rather than waiting for regulation or crisis, proactive companies are shaping what responsible AI looks like.

Second, it normalizes the principle that different users deserve different experiences. A 15-year-old should not receive the same AI responses as a 45-year-old. This principle will likely extend beyond age to other contexts (healthcare, education, accessibility) where one-size-fits-all AI is inadequate.

Third, it reframes the relationship between privacy and safety. OpenAI is showing that companies can protect teens without collecting invasive personal data—but only if they’re willing to default to safer experiences when uncertain.

Fourth, it creates accountability frameworks. By publishing specific policies, inviting external experts to review their effectiveness, and involving law enforcement in crisis situations, OpenAI is accepting public scrutiny in ways most tech companies avoid.

Challenges Ahead: The Road to Effective Implementation

Despite OpenAI’s comprehensive approach, significant challenges remain.

Age verification at scale is technically complex and globally inconsistent. Some regions lack reliable identity verification infrastructure. Bad-faith users will attempt to circumvent age detection. The system must also balance two kinds of error: false positives (adults placed in the teen experience) and false negatives (teens treated as adults).
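The trade-off between the two kinds of error is easiest to see with numbers. The sketch below uses made-up data: `TOY_SAMPLES` pairs a true age with a hypothetical estimator’s probability that the user is an adult. None of these values come from OpenAI or any real system, and `error_rates` is a name invented for this example.

```python
from typing import List, Tuple

# (true_age, estimated probability that the user is an adult)
Sample = Tuple[int, float]

# Invented values for illustration only.
TOY_SAMPLES: List[Sample] = [
    (14, 0.10), (15, 0.35), (16, 0.55), (17, 0.80),
    (19, 0.60), (25, 0.85), (34, 0.95), (45, 0.99),
]


def error_rates(samples: List[Sample], threshold: float) -> Tuple[float, float]:
    """Return (share of adults restricted, share of teens treated as adults).

    A user gets the adult experience only if their score is >= threshold.
    """
    adults = [p for age, p in samples if age >= 18]
    teens = [p for age, p in samples if age < 18]
    if not adults or not teens:
        raise ValueError("need at least one adult and one teen sample")
    adults_restricted = sum(p < threshold for p in adults) / len(adults)
    teens_missed = sum(p >= threshold for p in teens) / len(teens)
    return adults_restricted, teens_missed


print(error_rates(TOY_SAMPLES, 0.5))  # (0.0, 0.5)
print(error_rates(TOY_SAMPLES, 0.9))  # (0.5, 0.0)
```

On this toy data, a lenient threshold lets half the teens through as adults, while a strict one restricts half the adults. Defaulting to the teen experience when uncertain amounts to choosing the strict end of that range.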

Cultural and legal variation means teen protection in the U.S. differs from Europe, Asia, and the Global South. GDPR compliance, local laws around parental notification, and different definitions of harm require localized approaches rather than universal policies.

Parental involvement limitations are real. Not all teens have engaged parents. Some families lack digital literacy to use parental controls. Teens experiencing abuse or neglect need protections that don’t depend on parent cooperation.

Defining harmful content remains contested. What constitutes “graphic” content or “harmful body ideals”? These judgments involve values and cultural assumptions that reasonable people disagree on.

Measuring effectiveness at the population level is difficult. We won’t know if these protections are working until we observe long-term trends in teen mental health, help-seeking behavior, and harmful incident rates—data that won’t be available for years.

What This Means for Parents and Educators

If you’re a parent or educator, here’s the practical takeaway:

Enable parental controls actively. They’re optional features—you must deliberately link your account and configure settings. Don’t assume “default protection” means you’re done.

Have conversations about AI. Explain what ChatGPT can and can’t do. Discuss why you’ve set certain restrictions. Help teens develop a critical, questioning relationship with AI tools rather than dependence on them.

Report concerning behavior. If you notice signs of self-harm, excessive usage, or emotional manipulation through AI interactions, use the alerting features. The system only works when adults engage actively.

Stay informed as policies evolve. OpenAI’s policies will change as implementation reveals gaps. Keep checking for updates to parental controls and safety features.

Understand this isn’t a silver bullet. Parental controls are one layer of protection. Strong in-person relationships, mental health literacy, and open communication remain foundational.

The Bigger Picture: A Proactive Industry Moment

OpenAI’s Teen Safety Blueprint represents a rare moment in tech history: an industry-leading company choosing to slow down and get foundational decisions right before mass harm occurs.

We didn’t take this approach with social media. Facebook, YouTube, TikTok, and Snapchat experienced massive teen adoption before serious safety conversations began. By then, billions in engagement-driven design were locked in, habits were formed, and regulatory repair work began years too late.

With AI, we have a second chance.

This doesn’t mean AI is risk-free for teens. Risks will emerge that these safeguards don’t address. New forms of manipulation and harm will develop. The framework itself will need evolution.

But it does mean that the first major AI companies are choosing differently. They’re asking not “how do we maximize teen engagement?” but “how do we protect teens while enabling them to benefit from AI’s genuine opportunities?”

That’s the Teen Safety Blueprint in action—not a perfect solution, but a serious commitment to building AI that serves teens, rather than exploiting them.

Final Thoughts

As AI becomes woven into education, career preparation, mental health support, and entertainment for teens, the decisions we make now will echo for decades. OpenAI’s Teen Safety Blueprint isn’t flashy or controversial—it’s quietly pushing an entire industry toward a better standard.

The question now is whether other AI companies will match this commitment, whether regulators will support these frameworks with policy, and most importantly, whether implementation will actually protect teens in practice.

That’s a story still being written. But for the first time, tech companies are writing it with teens in mind from the beginning.