Have you ever wondered how we talk to computers? It is not magic, though sometimes it feels like it. We use special languages to give computers instructions. Think of it like teaching a very smart, very fast, but very literal robot a new language. The story of these languages is a human story—a story of brilliant minds solving incredible puzzles.

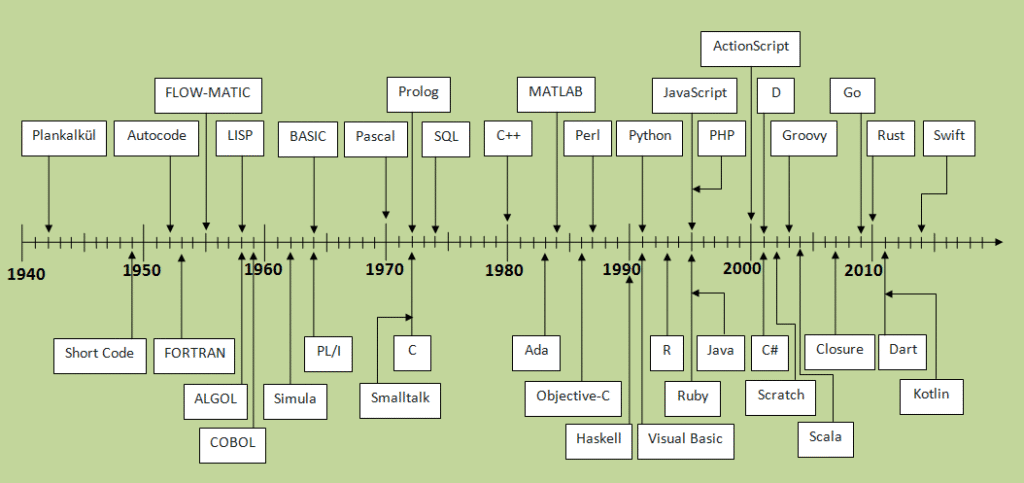

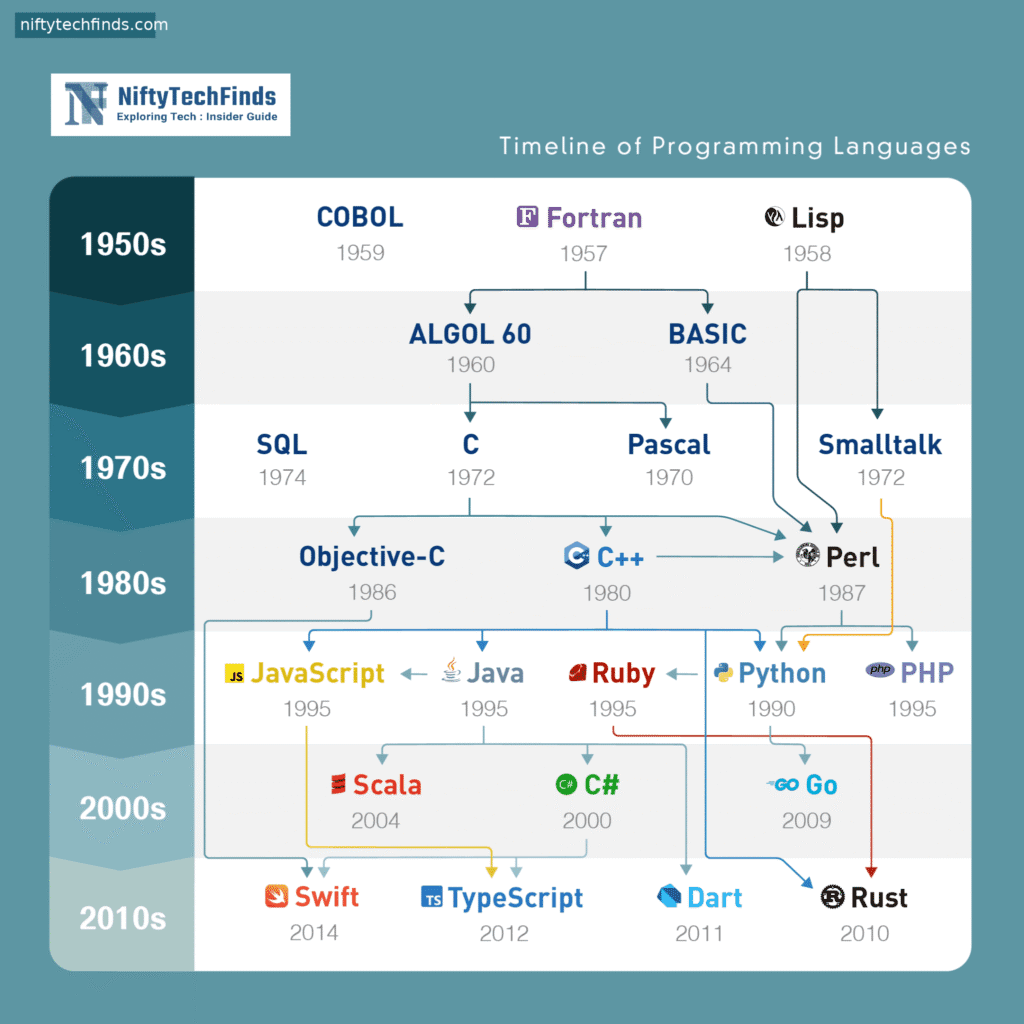

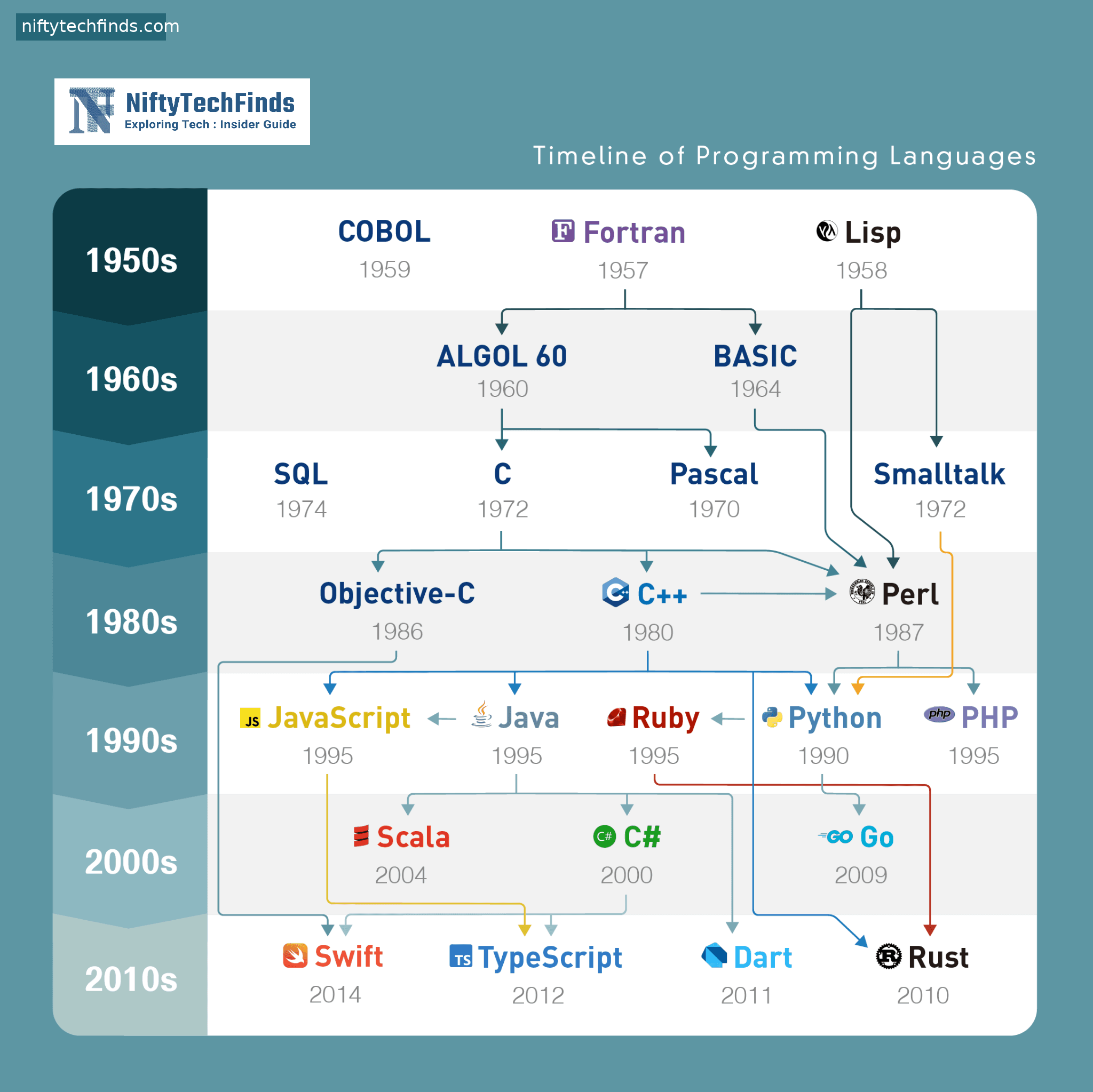

This guide walks through the history of programming languages as a timeline. It’s a journey that starts nearly two centuries ago, long before the computers we know today existed. It’s a story about building bigger, faster, and smarter machines. Whether you are a coding expert or just curious about the technology that shapes our world, this guide is for you. We will travel through time, meet the creators of these languages, and understand why they were invented. We will keep the language simple and clear, so everyone, no matter their English level, can enjoy this amazing adventure into the heart of technology.

Let’s begin our journey and discover the evolution of programming languages from start to finish.

The Ancestors: Before Digital Computers (1840s – 1940s)

Before we had glowing screens and microchips, the dream of a programmable machine already existed. This “pre-history” era is where the very first ideas of programming were born, written on paper long before they could be executed by a machine.

Ada Lovelace & The First Algorithm (1843)

The story of programming begins not with a man in a lab coat, but with a woman of nobility in Victorian England. Ada Lovelace, a gifted mathematician and the daughter of the poet Lord Byron, is widely considered the world’s first computer programmer.

She worked with Charles Babbage, the inventor of a conceptual machine called the “Analytical Engine.” While Babbage focused on the hardware—the gears and cogs of his giant calculator—Lovelace saw its true potential. She realized it could do more than just calculate numbers. It could follow a series of instructions to perform complex tasks.

In her notes, Lovelace wrote what is now recognized as the first-ever algorithm intended to be processed by a machine. It was a detailed plan to calculate a sequence of Bernoulli numbers. She imagined a machine that could create music or art, a vision that was over a century ahead of its time.

- Who invented the first programming language? While not a “language” in the modern sense, Ada Lovelace’s algorithm was the first set of detailed instructions written for a computing machine, making her the conceptual founder of programming.

Plankalkül: The First “High-Level” Language on Paper (1945)

Fast forward a century to Germany in the 1940s. Konrad Zuse, an engineer, was building some of the first programmable computers, including the mechanical Z1 and the electromechanical Z3. Working in isolation during World War II, he faced a problem: telling his machines what to do was incredibly difficult. He needed a better way to express his ideas.

Between 1942 and 1945, he designed Plankalkül (meaning “Plan Calculus”). It was a remarkably advanced language for its time. It had features like arrays and records that would not become common for decades. Zuse designed it to solve complex engineering problems. However, due to the war and its aftermath, Plankalkül was not published in full until 1972, and the first working implementation did not appear until 1975. For three decades it remained a brilliant idea on paper, a glimpse into the future of code.

Bold Takeaway: The idea of programming is older than computers themselves. It started with visionaries who saw that machines could be instructed to perform complex, creative tasks.

The Dawn of Modern Computing: The First Compilers (1950s – 1960s)

The 1950s was the Big Bang for programming languages. For the first time, computers were becoming a reality in universities and large corporations. Programmers were writing code in “assembly language,” which was very close to the machine’s own language of 1s and 0s. It was slow, difficult, and a single mistake could ruin everything. A revolution was needed.

FORTRAN: The Pioneer for Scientists and Engineers (1957)

The problem was clear: programming was a bottleneck. It took too long and required too much specialized knowledge. A team at IBM, led by John Backus, decided to solve this. Their goal was to create a language that was closer to human mathematical notation.

The result was FORTRAN (FORmula TRANslation). Released in 1957, it was the first widely used high-level programming language. For the first time, scientists and engineers could write equations like X = A + B and a program called a “compiler” would translate it into machine code.
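To make the idea of a compiler concrete, here is a minimal sketch in Python of translating a formula such as X = A + B into lower-level steps. The instruction names (`LOAD`, `ADD`, `STORE`) and the function `compile_assignment` are invented for illustration; they are not real FORTRAN compiler output or any real machine's instruction set.

```python
def compile_assignment(statement: str) -> list[str]:
    """Translate a 'X = A + B' style statement into made-up machine steps."""
    # Split "X = A + B" into the target ("X") and the expression ("A + B").
    target, expression = (part.strip() for part in statement.split("="))
    left, operator, right = expression.split()
    # Hypothetical instruction names, one per arithmetic operator.
    opcodes = {"+": "ADD", "-": "SUB", "*": "MUL", "/": "DIV"}
    return [f"LOAD {left}", f"{opcodes[operator]} {right}", f"STORE {target}"]


program = compile_assignment("X = A + B")
print("\n".join(program))
```

A real compiler handles far more (nested expressions, loops, optimization), but the core job is the same: turn one readable line into several machine-level steps.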

Initially, many programmers were skeptical. They believed their hand-written assembly code would always be faster. But the IBM team focused on creating a compiler that produced highly efficient code. They succeeded, and FORTRAN became a massive hit. It opened the doors of programming to a whole new group of people and is still used today in high-performance computing and for benchmarking the world’s fastest supercomputers.

COBOL: The Language of Business (1959)

While FORTRAN was conquering the scientific world, the business world had different needs. Businesses dealt with payroll, inventory, and customer records—data, not complex formulas. They needed a language that was readable, stable, and could manage large amounts of data.

The effort to create such a language was led by a committee whose work drew heavily on the ideas of the influential computer scientist Grace Hopper and her earlier language, FLOW-MATIC. Hopper believed that programming languages should be closer to English, so that managers could understand what the programs were doing. This philosophy led to COBOL (COmmon Business-Oriented Language).

COBOL code is famously verbose, using English-like sentences. For example: MULTIPLY HOURLY-RATE BY HOURS-WORKED GIVING GROSS-PAY. While modern programmers sometimes joke about its style, COBOL was a revolution for business. It was designed to be platform-independent, meaning a COBOL program could run on different types of computers, a groundbreaking idea at the time.

- User Base and Legacy: For decades, COBOL was the most widely used programming language in the world. It powered the global financial system, government agencies, and large corporations. Even today, billions of lines of COBOL code are still in active use, quietly running our world.

LISP: The Language of Artificial Intelligence (1958)

At the same time, another powerful idea was emerging in the academic world: Artificial Intelligence. At MIT, John McCarthy designed LISP (LISt Processing) to meet the unique needs of AI research.

Unlike FORTRAN or COBOL, LISP was not about numbers or business records. It was about symbols and ideas. Its core data structure is the “list,” which can hold other lists, allowing for incredibly flexible and powerful programs. A unique feature of LISP is that the code itself is written as lists. This property, known as “homoiconicity,” means that a LISP program can write and modify other LISP programs, a feature that is perfect for AI research. LISP and its dialects are still influential in fields like AI and computer science theory.
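The "code is data" idea can be sketched in Python by writing LISP-style expressions as nested lists. This is only an illustration under simplifying assumptions: the `evaluate` and `double` functions are hypothetical helpers, and the sketch supports just two operators, not real LISP.

```python
import operator

# The only operators this toy evaluator understands.
OPERATIONS = {"+": operator.add, "*": operator.mul}


def evaluate(expr):
    """Evaluate a LISP-style expression written as nested Python lists."""
    if not isinstance(expr, list):
        return expr  # a plain number evaluates to itself
    op, *args = expr
    values = [evaluate(arg) for arg in args]
    result = values[0]
    for value in values[1:]:
        result = OPERATIONS[op](result, value)
    return result


def double(expr):
    """Build a new program that doubles the result of an existing program."""
    return ["*", 2, expr]


program = ["+", 1, ["*", 2, 3]]   # LISP would write this as (+ 1 (* 2 3))
bigger_program = double(program)  # a program produced by another program

print(evaluate(program))
print(evaluate(bigger_program))
```

Because the program is an ordinary list, `double` can build a new program with the same tools used for any other data. That is the property the text calls homoiconicity.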

Bold Takeaway: The first generation of languages was created to solve specific problems: FORTRAN for science, COBOL for business, and LISP for AI. Their success proved the power of high-level, human-readable code.

The Rise of Structure: Discipline and Power (1970s)

The 1960s had been a wild, creative time for programming. But this creativity led to a problem. As programs grew larger, they became messy and difficult to manage, a situation often called “spaghetti code.” The 1970s was about bringing discipline and structure to programming, making code more reliable, reusable, and easier to understand.

Pascal: The Language for Good Habits (1970)

Niklaus Wirth, a Swiss computer scientist, believed that languages should encourage programmers to write clean, well-structured code. He created Pascal as a teaching language. It forced programmers to be organized and clear.

Pascal was strongly typed, and every variable had to be declared with a type (e.g., integer, character) before it was used, which let the compiler catch many errors early. It had a clean syntax and promoted a style called “structured programming,” which broke large programs down into smaller, manageable procedures and functions. For many years, Pascal was the primary language taught in computer science programs around the world.

C: The Foundation of Modern Systems (1972)

While Pascal was in the classroom, something far more influential was being built at Bell Labs. Dennis Ritchie, along with his colleague Ken Thompson, was building the UNIX operating system. They initially used assembly language, but it was slow to write and tied to one specific type of computer. They needed a language that was high-level enough to be productive but low-level enough to control hardware directly.

Ritchie created C, building on Thompson’s earlier B language. It shared the structured programming ideas of languages like ALGOL and Pascal but gave the programmer much more power and flexibility. C was like a sharp knife: powerful, precise, and a bit dangerous if you were not careful.

The real breakthrough came when Ritchie and Thompson rewrote the UNIX kernel in C. This meant the entire operating system could be “ported” (moved) to different computers simply by recompiling the C code. This was revolutionary. It decoupled software from hardware and led to the explosion of UNIX and its descendants, including Linux and macOS.

- User Base and Impact: C became the language of choice for systems programming: operating systems, compilers, databases, and device drivers. Almost every major language that came after it, including C++, C#, Java, and Python, was either written in C or heavily influenced by its syntax. Year-by-year popularity rankings have consistently placed C in the top tier for over 40 years.

Smalltalk: The Birth of the Graphical World (1972)

While C was conquering systems programming, a team at Xerox PARC led by Alan Kay had a completely different vision for the future of computing. They imagined a world of personal computers with graphical user interfaces (GUIs)—windows, icons, menus, and a mouse.

To build this world, they created Smalltalk. Building on ideas from the earlier Simula language, Smalltalk shaped and popularized a powerful paradigm: Object-Oriented Programming (OOP). In Smalltalk, everything is an “object.” An object bundles together data and the functions that can operate on that data. You interact with objects by sending them “messages.”

This was a perfect model for a graphical world. A window could be an object, a button could be an object, a scrollbar could be an object. This organized, modular approach made building complex graphical systems possible. The work done with Smalltalk at Xerox PARC directly inspired the Apple Lisa and Macintosh, and later, Microsoft Windows.
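The object model described above can be sketched in Python rather than Smalltalk. The class names `Button` and `Window` and their methods are hypothetical, chosen only to mirror the examples in the text; a method call plays the role of a Smalltalk "message."

```python
class Button:
    """An object bundles data (label, click count) with the code that uses it."""

    def __init__(self, label):
        self.label = label
        self.clicks = 0

    def click(self):
        # Responding to the "click" message updates the object's own data.
        self.clicks += 1
        return f"{self.label} clicked {self.clicks} time(s)"


class Window:
    """A window is itself an object, and it holds other objects."""

    def __init__(self, title):
        self.title = title
        self.widgets = []

    def add(self, widget):
        self.widgets.append(widget)

    def click_all(self):
        # Send the same "click" message to every widget in the window.
        return [widget.click() for widget in self.widgets]


window = Window("Demo")
window.add(Button("OK"))
window.add(Button("Cancel"))
print(window.click_all())
```

Each button keeps track of its own state, so the window does not need to know how a click is counted. That separation is what makes large graphical systems manageable.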

The Object-Oriented Revolution and the Web’s First Steps (1980s-1990s)

The 1980s saw the ideas of Object-Oriented Programming (OOP) go mainstream. Programmers realized that as software became more complex, OOP was a better way to manage that complexity. The 1990s was defined by one thing: the birth of the World Wide Web, which created a need for a whole new generation of languages.

C++: C with Classes (1985)

Bjarne Stroustrup, a Danish computer scientist at Bell Labs, loved the power and performance of C, but he missed the organizational features of object-oriented languages like Simula. He decided to create a language that had the best of both worlds.

He called it C++ (“C plus plus,” a clever programming joke meaning “increment C”). C++ was designed as a near-superset of C: most valid C programs are also valid C++ programs, with a few exceptions. But it added powerful OOP features like “classes,” which are blueprints for creating objects.

- Timeline of object-oriented programming languages: While Smalltalk introduced many core OOP concepts, C++ was the language that brought OOP to the masses. It was fast, powerful, and backward-compatible with the vast amount of existing C code. It became the dominant language for high-performance applications, including video games, financial trading systems, and scientific simulations, a position it still holds.

Python: Programming for Everyone (1991)

In the late 1980s, Guido van Rossum, a Dutch programmer, was looking for a hobby project to keep him busy over the Christmas holidays. He wanted to create a scripting language that was powerful but also easy to read and fun to use. He named it Python, after the British comedy group Monty Python.

Python’s philosophy was different. It emphasized code readability and simplicity. Its syntax is clean and uncluttered, making it easy for beginners to learn. But underneath that simplicity is a very powerful language. Python came with a large standard library, often described as “batteries included,” meaning you could do a lot without needing to find external code.

- User Base Growth: For its first decade, Python had a small but dedicated following. Its explosive growth began in the 2000s. Its ease of use made it a favorite in web development (with frameworks like Django) and system administration. More recently, its powerful data analysis and machine learning libraries (like NumPy, Pandas, and TensorFlow) have made it the undisputed king of data science and AI, catapulting it to the #1 spot on many popularity indexes like the TIOBE index.

Java and JavaScript: The Languages of the Web (1995)

In the mid-1990s, the internet changed everything. Suddenly, computers were connected, and this created new challenges and opportunities. Two languages, created in the same year but with very different purposes, would come to define the web era.

Java (1995): At Sun Microsystems, a team led by James Gosling was initially developing a language for interactive television set-top boxes. The project wasn’t a commercial success, but they had created a remarkable language. It was object-oriented, heavily influenced by C++, but simpler and safer. Its killer feature was the Java Virtual Machine (JVM). The JVM was a piece of software that acted as a computer-within-a-computer. You could compile your Java code once, and it would run on any device that had a JVM. Their slogan was “Write Once, Run Anywhere.”

This was the perfect feature for the internet. Developers could write a Java application and it would run on Windows, Mac, or Linux. Java quickly became the dominant language for large-scale enterprise applications and web backends, and it later became the primary language for Android app development. Its user base exploded, making it one of the most popular languages of all time.
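The "computer-within-a-computer" idea behind the JVM can be illustrated with a toy stack machine in Python. This is a sketch only: the instruction names `PUSH`, `ADD`, and `MUL` and the `run` function are invented for this example and are not real JVM bytecode.

```python
def run(bytecode):
    """Execute a list of (instruction, argument) pairs on a tiny stack machine."""
    stack = []
    for instruction, argument in bytecode:
        if instruction == "PUSH":
            stack.append(argument)
        elif instruction == "ADD":
            right, left = stack.pop(), stack.pop()
            stack.append(left + right)
        elif instruction == "MUL":
            right, left = stack.pop(), stack.pop()
            stack.append(left * right)
        else:
            raise ValueError(f"unknown instruction: {instruction}")
    return stack.pop()


# A "compiled" form of (2 + 3) * 4 in the made-up instruction set.
bytecode = [("PUSH", 2), ("PUSH", 3), ("ADD", None), ("PUSH", 4), ("MUL", None)]
print(run(bytecode))
```

The same `bytecode` list gives the same answer on any machine that can run this `run` function. In the same spirit, compiled Java code runs on any device that has a JVM.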

JavaScript (1995): While Java found its long-term home on the “server-side” (the powerful computers running websites), there was a need to make web pages themselves more interactive. Brendan Eich at Netscape was given a monumental task: create a simple scripting language for the browser in just 10 days.

The result was JavaScript. Despite its similar name, it has no direct relationship with Java. It was a simpler, more flexible language designed to manipulate web page elements. For many years, JavaScript was seen as a “toy” language for creating simple animations. But as web applications became more complex (think Google Maps or Gmail), JavaScript’s importance soared. With the creation of powerful frameworks like React, Angular, and Vue.js, and server-side platforms like Node.js, JavaScript has become the language of the modern web, capable of building both the front-end (what you see) and the back-end (the logic behind it).

Bold Takeaway: The 1990s were defined by the web. Java’s platform independence made it perfect for enterprise back-ends, while JavaScript brought interactivity to the browser. Python’s simplicity was quietly building a community that would later dominate the world of data.

The Modern Era: Specialization and Safety (2000s – Present)

The 21st century has seen an explosion of new languages. The one-size-fits-all approach is less common. Modern languages are often designed to solve specific problems better than their predecessors, with a strong focus on developer productivity, security, and performance in a world of multi-core processors and mobile devices.

C#: Microsoft’s Answer to Java (2000)

In the late 90s, Microsoft was in a battle for dominance with Sun Microsystems and Java. In response, they created their own powerful, object-oriented language. Led by the legendary Anders Hejlsberg (who had previously created Turbo Pascal and Delphi), they developed C# (pronounced “C Sharp”).

C# is conceptually very similar to Java. It is object-oriented, strongly-typed, and runs on a managed runtime (.NET Framework, now just .NET). It was designed to make developing applications for Windows easy and fast. Over the years, C# has grown far beyond Windows. With .NET Core (and now .NET 5+), it is fully cross-platform. It is widely used for building web services and enterprise applications, and it is the primary scripting language for the popular Unity game engine.

Go: Simplicity at Google Scale (2009)

At Google, engineers were dealing with massive codebases and a need for high-performance networking and concurrency (doing many things at once). Languages like C++ were fast but complex, and compilation times were very slow. Languages like Python were easy to use but not always fast enough.

A team of computing legends, including Ken Thompson (co-creator of Unix and creator of C’s predecessor, B), Rob Pike, and Robert Griesemer, decided to create a new language. The result was Go. Go’s philosophy is radical simplicity. It has a very small feature set, which makes it easy to learn and read. Its standout feature is its built-in support for concurrency through “goroutines” and “channels,” making it incredibly easy to write programs that can do thousands of things simultaneously. It compiles extremely fast and produces a single, self-contained executable file, making deployment simple. Go has become very popular for cloud computing, microservices, and networking tools.
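The worker-and-channel pattern can be approximated in Python, with the important caveat that this is an analogy and not Go. Here threads stand in for goroutines and `queue.Queue` stands in for channels. Python threads are much heavier than goroutines and do not run CPU-bound code in parallel in the standard interpreter, so this sketch shows the shape of the pattern, not Go's performance.

```python
import queue
import threading


def worker(jobs, results):
    """Take numbers from one queue and put their squares on another."""
    while True:
        number = jobs.get()
        if number is None:  # sentinel value: no more work for this worker
            break
        results.put(number * number)


jobs, results = queue.Queue(), queue.Queue()
threads = [threading.Thread(target=worker, args=(jobs, results)) for _ in range(4)]
for thread in threads:
    thread.start()

for number in range(10):
    jobs.put(number)
for _ in threads:
    jobs.put(None)  # one sentinel per worker so every thread stops
for thread in threads:
    thread.join()

# Workers finish in an unpredictable order, so sort before printing.
squares = sorted(results.get() for _ in range(10))
print(squares)
```

The workers never share variables directly. They only pass values through queues, which is the same discipline Go encourages with channels.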

Swift: A Modern Language for Apple (2014)

For decades, programming for Apple’s platforms (macOS and iOS) was done in a language called Objective-C. Objective-C was powerful but was based on C and Smalltalk and had a syntax that many modern programmers found strange.

In 2014, Apple surprised the world by announcing Swift, a brand new language to replace Objective-C. Developed by a team led by Chris Lattner, Swift is a modern, fast, and, most importantly, safe language. It has features designed to prevent common programming errors. Its syntax is clean and expressive. Since its launch, Swift has seen rapid adoption and is now the primary language for building applications across the entire Apple ecosystem: iOS, iPadOS, macOS, watchOS, and tvOS.

Rust: Performance with a Safety Guarantee (2015)

For high-performance systems programming, C and C++ have been the kings for decades. But they have a weakness: memory safety. A huge number of bugs and security vulnerabilities in software are caused by mistakes in managing memory.

Graydon Hoare, a developer at Mozilla, started Rust as a personal project to solve this problem. Rust is a systems language that is as fast as C++ but provides a guarantee of memory safety without needing a garbage collector (an automatic memory manager that can sometimes pause a program). It achieves this through a unique “ownership” and “borrowing” system that is checked by the compiler. If your Rust program compiles, you can be confident that it is free from a whole class of common bugs. This makes Rust a revolutionary choice for building operating systems, web browsers, game engines, and other software where performance and reliability are critical.

Building on Existing Languages: TypeScript and Kotlin

Not all modern languages are built from scratch. Two of the most popular recent languages are built on top of existing ones:

- TypeScript (2012): A superset of JavaScript developed by Microsoft. It adds a static type system to JavaScript. This allows developers to catch errors before the code even runs, making it easier to build and maintain large, complex JavaScript applications.

- Kotlin (2016): Developed by JetBrains and first unveiled in 2011, Kotlin reached version 1.0 in 2016. It is a modern, pragmatic language that runs on the JVM. Although it is not a strict superset of Java, it is fully interoperable with Java code. In 2019, Google announced it was the preferred language for Android development. It offers a more concise and safer syntax than Java, which has led to its rapid adoption.

Bold Takeaway: Modern language design is about providing the right tool for the job. Whether it’s Go for cloud simplicity, Swift for Apple’s ecosystem, or Rust for guaranteed safety, today’s programmers have a rich and diverse toolkit to choose from.

The Future of Programming: What’s Next?

The programming language history timeline is a story of continuous evolution. What does the future hold? We are seeing a few clear trends:

- AI and Code Generation: AI tools like GitHub Copilot are already changing how developers write code. In the future, we may describe what we want our program to do in natural language, and an AI will generate the underlying code in the most suitable programming language.

- Quantum Computing Languages: As quantum computers become a reality, we will need new languages to control their unique properties. Languages and toolkits such as Q# (from Microsoft) and Qiskit (IBM’s Python-based software development kit) are already pioneering this new frontier.

- Increased Focus on Safety and Simplicity: As software becomes more integrated into every aspect of our lives, the cost of bugs and security flaws grows. We can expect future languages to have even stronger features for preventing errors, following the path laid by languages like Rust.

The journey from Ada Lovelace’s first algorithm to the complex AI systems of today is one of the greatest intellectual adventures in human history. The next chapter is still being written, and it promises to be just as exciting.

Frequently Asked Questions (FAQ)

Here are answers to some of the most common questions about the history of programming languages.

Q: What was the very first programming language? A: The first conceptual algorithm for a machine was written by Ada Lovelace in 1843 for Charles Babbage’s Analytical Engine. The first high-level programming language to be designed was Plankalkül by Konrad Zuse in the 1940s, though it was not implemented at the time. The first widely used high-level language with a compiler was FORTRAN, released in 1957.

Q: Who invented the first programming language? A: This question has several answers depending on the definition. Ada Lovelace is considered the first programmer for her theoretical work. Konrad Zuse designed the first complete high-level language, Plankalkül. John Backus and his team at IBM created FORTRAN, the first language to gain widespread commercial use.

Q: What is the most popular programming language? A: As of 2025, Python is consistently ranked as the most popular programming language across various indexes like the TIOBE Index and developer surveys. Its popularity is driven by its versatility, ease of use, and dominance in the rapidly growing fields of data science, machine learning, and AI. JavaScript, Java, C++, and C# also remain extremely popular.

Q: Is HTML a programming language? A: No, HTML (HyperText Markup Language) is not considered a programming language. It is a markup language. Its purpose is to describe the structure and content of a web page (e.g., “this is a heading,” “this is a paragraph”). It does not contain logic, loops, or algorithms, which are the hallmarks of a true programming language.

Q: What problem did the first programming language solve? A: The first widely used programming language, FORTRAN, was created to solve the problem of speed and complexity in scientific and engineering calculations. Before FORTRAN, programming was done in low-level assembly language, which was extremely time-consuming and error-prone. FORTRAN allowed scientists to write mathematical formulas in a more natural way, dramatically increasing their productivity and making computers accessible to a wider audience of technical experts.

Q: Why are there so many programming languages? A: There are many programming languages for the same reason there are many types of tools in a toolbox. Different languages are designed to solve different problems well. Some languages, like C, are designed for low-level system control. Others, like Python, are designed for rapid development and data analysis. New languages are created to improve on older ones, introduce new programming paradigms (like Rust’s safety features), or target new platforms (like Swift for iOS).
