For years, AI had a major limitation: it forgot everything.

Every conversation reset. Every task started from zero.

In 2026, that limitation is disappearing.

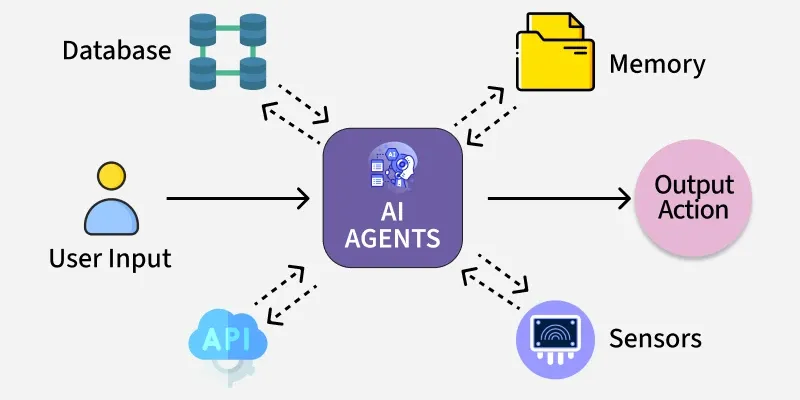

Modern AI agents now have:

- Persistent memory

- Access to emails, files, calendars, browsers

- Permission to act on your behalf

That means your AI agent can know more about you than any human ever has.

And that raises a serious question:

What happens when your AI agent knows everything?

This article explains AI agent security and privacy in simple terms, without fear-mongering — but without sugarcoating the risks either.

What Makes AI Agent Security Different From Traditional AI Security?

Traditional AI (Pre-Agent Era)

- Stateless

- Short-term memory

- Limited access

- Human-in-the-loop by default

Agentic AI (2026 Reality)

- Long-term memory

- Tool access (email, CRM, cloud drives)

- Autonomous decision-making

- Continuous operation

👉 Security is no longer just about models — it’s about behavior.

Why AI Agent Privacy Risks Are Fundamentally New

AI agents introduce three new privacy dimensions:

1. Persistent Memory

Your agent remembers:

- Preferences

- Conversations

- Business data

- Personal habits

Unlike humans, it never forgets unless designed to.

2. Cross-Tool Visibility

AI agents can see:

- Your email

- Your calendar

- Your documents

- Your browsing history

This creates a single point of data concentration — a major privacy risk.

3. Autonomous Action

Agents don’t just know — they do:

- Send messages

- Schedule meetings

- Make purchases

- Trigger workflows

A mistake is no longer just informational — it becomes operational.

What Data Do AI Agents Actually Have Access To?

Depending on permissions, an AI agent may access:

- Email inboxes

- Messaging apps

- Cloud storage

- CRM systems

- Financial dashboards

- Internal documents

- Browsers and APIs

Platforms from OpenAI, Google, Microsoft, and Anthropic are actively building permissioned agent systems to manage this risk.

The Biggest AI Agent Security Risks (Explained Simply)

1. Over Permissioned Agents

Most users click “Allow All”.

That means:

- Too much access

- Too little oversight

Principle of least privilege is often ignored — dangerously.

2. Prompt Injection & Agent Manipulation

Attackers can:

- Trick agents via emails

- Embed malicious instructions in documents

- Hijack workflows indirectly

This is one of the fastest-growing AI agent security threats.

3. Memory Poisoning

If an agent stores incorrect or malicious data in long-term memory, it may:

- Repeat false information

- Make bad decisions

- Automate harmful actions

Memory becomes an attack surface.

4. Data Leakage Through Actions

Even if data is not “leaked,” an agent might:

- Reference sensitive info in replies

- Use private context in public outputs

- Accidentally expose trade secrets

5. Vendor & Platform Risk

If your agent runs on third-party infrastructure:

- Who owns the data?

- Who can audit behavior?

- Who is liable when something goes wrong?

These questions are still legally unresolved.

AI Agent Privacy vs Convenience: The Real Trade-Off

| Convenience | Privacy Risk |

|---|---|

| Auto-email replies | Email content exposure |

| Smart scheduling | Calendar metadata |

| Personalized suggestions | Behavioral profiling |

| Autonomous purchasing | Financial access |

| Long-term memory | Identity reconstruction |

👉 The smarter the agent, the more sensitive the data.

How AI Agent Security Is Being Designed in 2026

Leading platforms are implementing:

✔ Permission Scopes

Agents only access what they need.

✔ Action Sandboxing

High-risk actions require approval.

✔ Memory Controls

- Expiring memory

- Editable memory

- Memory visibility

✔ Audit Logs

Every action is recorded and reviewable.

✔ Agent Identity Separation

Different agents for different roles.

Best Practices: How to Protect Yourself (Non-Technical)

1. Treat Your AI Agent Like a Human Employee

- Don’t give blanket access

- Limit financial authority

- Review actions regularly

2. Separate Personal and Work Agents

Never let one agent handle everything.

3. Use Human Approval for Critical Actions

Especially:

- Payments

- External communication

- Publishing

4. Review Agent Memory Monthly

Delete outdated or sensitive entries.

5. Prefer Platforms With Transparency

Look for:

- Clear data policies

- Memory controls

- Export/delete options

Will Regulations Catch Up to AI Agents?

Governments are beginning to respond.

Expect:

- AI agent audit requirements

- Consent-based memory rules

- Agent action liability laws

- Data residency enforcement

The EU, US, and Asia-Pacific regions are already drafting agent-specific AI policies, inspired by earlier frameworks like GDPR — but adapted for autonomous systems.

The Ethical Question No One Is Ready to Answer

If your AI agent:

- Knows your secrets

- Understands your behavior

- Acts in your name

Then ask yourself:

Is it a tool — or a digital extension of you?

And if something goes wrong…

👉 Who is responsible?

Frequently Asked Questions (FAQ)

Q: Can AI agents spy on me?

A: Not intentionally — but poor permission management can expose data.

Q: Is AI agent memory permanent?

A: It depends on the platform. Many now offer editable or expiring memory.

Q: Should I trust AI agents with financial access?

A: Only with strict limits and human approval.

Q: Are AI agents safer than humans with data?

A: They are more consistent — but mistakes scale faster.

Q: What’s the safest way to use AI agents today?

A: Narrow scope, clear goals, frequent review.

Final Thought

AI agents don’t just assist you.

They observe, remember, decide, and act.

The future won’t be decided by how powerful AI agents become —

but by how carefully we control what they know and what they’re allowed to do.

👉 Would you trust an AI agent with your full digital life?

👉 Where should the line between convenience and privacy be drawn?

Let’s have that conversation — before the agents make the decisions for us.